SUID (Set User ID upon execution) is a special type of file permission that can be given to a file. Normally in Linux/Unix, when a program runs, it inherits its access permissions from the user who runs it. SUID instead lets that user temporarily run the program/file with the permissions of the file owner rather than those of the user who is running it. In simple words, while the program runs, its effective user ID (UID) is that of the file owner, so it can do whatever the owner is allowed to do.

When we change our password we use the passwd command, which is owned by root as shown below. To change a password, passwd has to edit system files such as /etc/passwd and /etc/shadow. A normal user cannot modify these files (and cannot even read /etc/shadow); only root can. So if we removed SUID from passwd, even giving it full permissions would not help: it would run with our own privileges, could not open /etc/shadow to save the change, and we would get a permission denied (or similar) error. That is why passwd is set with SUID: it runs with root's privileges on behalf of a normal user, so it can update /etc/shadow and the other files.
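To see this on your own machine, here is a short Python sketch that checks whether the SUID bit is set on a file. Python's `os` and `stat` modules expose the same mode bits that chmod and ls work with. The helper name `has_suid` is made up for illustration. The path `/usr/bin/passwd` is also an assumption: it is the usual location on Linux, but some systems install passwd elsewhere.

```python
import os
import stat


def has_suid(path: str) -> bool:
    """Return True if the SUID bit (octal 4000) is set on path."""
    mode = os.stat(path).st_mode
    return bool(mode & stat.S_ISUID)


if __name__ == "__main__":
    # Assumption: passwd is installed at /usr/bin/passwd (common on Linux).
    passwd_path = "/usr/bin/passwd"
    if os.path.exists(passwd_path):
        st = os.stat(passwd_path)
        # Print the ls -l style permission string, the owner's UID (0 = root)
        # and whether SUID is set.
        print(passwd_path, stat.filemode(st.st_mode),
              "owner UID:", st.st_uid, "SUID set:", has_suid(passwd_path))
    else:
        print(passwd_path, "not found on this system")
```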

How can I set up SUID for a file?

SUID can be set in two ways:

1) Symbolic way (s, stands for Set)
2) Numerical/octal way (4)

Use the chmod command to set SUID on a file, here file1.txt.

Symbolic way:

chmod u+s file1.txt

Here +s sets the SUID bit, which is shown in place of the owner's execute bit.
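In Python terms, `chmod u+s` amounts to reading the current permission bits and OR-ing in the SUID bit (octal 4000), leaving every other bit alone. This is a minimal sketch and assumes you own the file. `chmod_u_plus_s` is a hypothetical helper name, not a standard function:

```python
import os
import stat


def chmod_u_plus_s(path: str) -> None:
    """Rough equivalent of `chmod u+s path`: add SUID, keep all other bits."""
    current = stat.S_IMODE(os.stat(path).st_mode)  # permission bits only, no file type
    os.chmod(path, current | stat.S_ISUID)         # OR in the 4000 bit
```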

Numerical way:

chmod 4750 file1.txt

Here in 4750, 4 indicates the SUID bit, 7 gives full (read, write and execute) permissions to the owner, 5 gives read and execute permissions to the group, and 0 gives no permissions to others.
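You can check the octal arithmetic by building 4750 from Python's named `stat` constants. Each digit is the sum of the read (4), write (2) and execute (1) bits for that class, and the leading 4 is the SUID bit. In this small sketch, `stat.filemode` renders the result the way ls -l would:

```python
import stat

# Build 4750 from named bits: SUID + owner rwx + group r-x + nothing for others.
mode = (
    stat.S_ISUID                                    # 4000: set-user-ID
    | stat.S_IRUSR | stat.S_IWUSR | stat.S_IXUSR    # 0700: owner rwx (4+2+1 = 7)
    | stat.S_IRGRP | stat.S_IXGRP                   # 0050: group r-x (4+1 = 5)
)                                                   # 0000: others get nothing

print(oct(mode))                            # 0o4750
print(stat.filemode(stat.S_IFREG | mode))   # -rwsr-x---
```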

How can I check if a file is set with SUID bit or not?

Use ls -l and check whether the x in the owner permissions field has been replaced by s or S.

For example, here is the listing of file1.txt before and after setting SUID:

Before SUID set:

ls -l

total 8
-rwxr--r-- 1 xyz xyzgroup 148 Dec 22 03:46 file1.txt

After SUID set:

ls -l

total 8

-rwsr--r-- 1 xyz xyzgroup 148 Dec 22 03:46 file1.txt
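The two listings differ only in the SUID bit. As a quick illustration, Python's `stat.filemode` produces the same permission string as ls -l. This sketch shows the change for the modes in this example, 744 before and 4744 after:

```python
import stat

before = stat.S_IFREG | 0o744      # regular file, rwxr--r--
after = before | stat.S_ISUID      # same bits plus SUID (4744)

print(stat.filemode(before))  # -rwxr--r--
print(stat.filemode(after))   # -rwsr--r--
```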

Some FAQs related to SUID:

A) Where is SUID used?

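1) Where root privileges are required to execute some commands/programs.

2) Where you don't want to share a particular user's credentials but still want others to run some programs as that owner.

3) Where you don't want to use the sudo command but still want to let users execute a file with its owner's permissions. Note that Linux ignores the SUID bit on interpreted scripts (for example, shell scripts started with #!), so this works for compiled programs rather than scripts.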

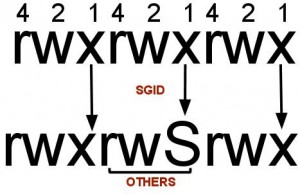

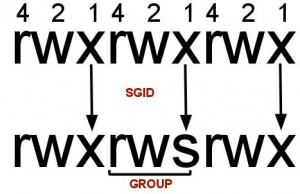

B) I am seeing “S”, i.e. a capital “s”, in the file permissions. What does that mean?

If you see ‘S’ in the permission field after setting SUID on a file/folder, it means SUID is set but the owner does not have execute permission on that file/folder.

For example:

chmod u+s file1.txt

ls -l
-rwSrwxr-x 1 surendra surendra 0 Dec 27 11:24 file1.txt

If you want to convert this S to s, add execute permission for the owner, as shown below:

chmod u+x file1.txt
ls -l
-rwsrwxr-x 1 surendra surendra 0 Dec 27 11:24 file1.txt

You should now see a lowercase ‘s’ in the execute permission position.
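Whether ls shows s or S depends only on whether the owner's execute bit is set alongside SUID. This small Python sketch reproduces the two listings in this answer with `stat.filemode`: mode 4675 first, then 4775 after u+x.

```python
import stat

# Owner rw-, group rwx, others r-x, plus SUID: the owner execute bit is missing.
no_exec = stat.S_IFREG | stat.S_ISUID | 0o675
print(stat.filemode(no_exec))    # -rwSrwxr-x  (capital S)

# Equivalent of `chmod u+x`: add the owner execute bit.
with_exec = no_exec | stat.S_IXUSR
print(stat.filemode(with_exec))  # -rwsrwxr-x  (lowercase s)
```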

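C) How can I find all the files with SUID set in Linux/Unix?

find / -perm -4000

The find command above lists every file that has the SUID bit (4000) set. Older articles use find / -perm +4000, but current GNU find no longer accepts the + form. Use -4000 (all of these bits set) or /4000 (any of these bits set) instead.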
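If you want this check inside a program, a rough Python equivalent walks a directory tree and reports regular files whose mode includes the SUID bit. This is a sketch, not a drop-in replacement for find. The name `find_suid_files` is hypothetical, unreadable directories are silently skipped, and the example scans /usr/bin rather than / only to keep the run short.

```python
import os
import stat
from typing import Iterator


def find_suid_files(root: str) -> Iterator[str]:
    """Yield regular files under root that have the SUID bit set."""
    # onerror swallows permission errors on directories instead of raising.
    for dirpath, _dirnames, filenames in os.walk(root, onerror=lambda err: None):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                st = os.lstat(path)  # lstat: do not follow symlinks
            except OSError:
                continue  # file vanished or cannot be stat'ed; skip it
            if stat.S_ISREG(st.st_mode) and st.st_mode & stat.S_ISUID:
                yield path


if __name__ == "__main__":
    for p in find_suid_files("/usr/bin"):
        print(p)
```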

D) Can I set SUID for folders?

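Yes, you can set the SUID bit on a directory with the same chmod commands (remember that Linux treats everything, directories included, as a file). However, Linux ignores SUID on directories: setting it does not change who owns the files created inside.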
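To confirm that the bit can be set on a directory, this small Python sketch creates a temporary directory and applies the equivalent of chmod 4755 to it. It assumes a typical Linux system, where the bit is stored and shown by ls as an s in the owner execute position even though it has no effect on how the directory behaves.

```python
import os
import stat
import tempfile

# Create a scratch directory and set SUID on it, like `chmod 4755 dir`.
with tempfile.TemporaryDirectory() as d:
    os.chmod(d, 0o4755)
    mode = os.stat(d).st_mode
    print(stat.filemode(mode))          # drwsr-xr-x
    print(bool(mode & stat.S_ISUID))    # True
```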

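E) What is the numerical value of SUID?

SUID has the value 4 in the special-permissions digit, which is the leading digit of a four-digit octal mode such as 4750 (that is, octal 4000).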